Learning Cloud

Fixing pods stuck in ContainerCreating on an EKS cluster with EFS

Previous post

https://hiheey.tistory.com/169

EKS EFS setup

EFS is file storage that can be accessed from multiple Availability Zones, so a security group has to be created for it. efs.tf: resource "aws_efs_file_system" "kang" { creation_token = "kang-efs" tags = { Name = "kang-efs" purpose = "hands-on" } } resource "aws_sec

YAML files used

values.yaml

# Default values for game2048.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

appName: "game2048"

replicaCount: 3

image:
  repository: 194453983284.dkr.ecr.ap-northeast-2.amazonaws.com/myreca
  pullPolicy: IfNotPresent
  # Overrides the image tag whose default is the chart appVersion.
  tag: "FE9"

imagePullSecrets: []
nameOverride: ""
fullnameOverride: ""

podAnnotations: {}

maxSurge: 34%
maxUnavailable: 0%

service:
  type: ClusterIP
  port: 80

ingress:
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/load-balancer-name: kanga-alb
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
    alb.ingress.kubernetes.io/healthcheck-path: /
    alb.ingress.kubernetes.io/subnets: subnet-05d15ee5dd82f7ba6, subnet-00d012c877208194c
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-northeast-2:194453983284:certificate/3497f02e-c348-4ee3-a598-1fd20b239e1b
  ingressRule:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: game2048
                port:
                  number: 80
  tls:
    - hosts:
        - 1jo10000jo.link # replace with your actual domain
      secretName: game2048-acm-tls-secret # any secret name you choose

resources:
  # We usually recommend not to specify default resources and to leave this as a conscious
  # choice for the user. This also increases chances charts run on environments with little
  # resources, such as Minikube. If you do want to specify resources, uncomment the following
  # lines, adjust them as necessary, and remove the curly braces after 'resources:'.
  limits:
    cpu: 1000m
    memory: 256Mi
  requests:
    cpu: 1000m
    memory: 256Mi

autoscaling:
  enabled: true
  minReplicas: 2
  maxReplicas: 10
  targetCPUUtilizationPercentage: 20
  # targetMemoryUtilizationPercentage: 80

nodeSelector:
  nodeType: service-2023

tolerations:
  - key: service
    operator: "Equal"
    value: "true"
    effect: "NoSchedule"

affinity: {}

pvc:
  name: efs-claim
  accessModes:
    - ReadWriteMany
  storageClassName: aws-efs-sc
  storage: 2Gi

Chart.yaml

apiVersion: v2
name: game2048
description: A Helm chart for Kubernetes

# A chart can be either an 'application' or a 'library' chart.
#
# Application charts are a collection of templates that can be packaged into versioned archives
# to be deployed.
#
# Library charts provide useful utilities or functions for the chart developer. They're included as
# a dependency of application charts to inject those utilities and functions into the rendering
# pipeline. Library charts do not define any templates and therefore cannot be deployed.
type: application

# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.1.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "1.16.0"

_helpers.tpl

{{/*
Expand the name of the chart.
*/}}
{{- define "game2048.name" -}}
{{- default .Chart.Name .Values.nameOverride | trunc 63 | trimSuffix "-" }}
{{- end }}

{{/*
Create a default fully qualified app name.
We truncate at 63 chars because some Kubernetes name fields are limited to this (by the DNS naming spec).
If release name contains chart name it will be used as a full name.
*/}}
{{- define "game2048.fullname" -}}
{{- if .Values.fullnameOverride }}
{{- .Values.fullnameOverride | trunc 63 | trimSuffix "-" }}
{{- else }}
{{- $name := default .Chart.Name .Values.nameOverride }}
{{- if contains $name .Release.Name }}
{{- .Release.Name | trunc 63 | trimSuffix "-" }}
{{- else }}
{{- printf "%s-%s" .Release.Name $name | trunc 63 | trimSuffix "-" }}
{{- end }}
{{- end }}
{{- end }}

{{/*
Create chart name and version as used by the chart label.
*/}}
{{- define "game2048.chart" -}}
{{- printf "%s-%s" .Chart.Name .Chart.Version | replace "+" "_" | trunc 63 | trimSuffix "-" }}
{{- end }}

{{/*
Common labels
*/}}
{{- define "game2048.labels" -}}
helm.sh/chart: {{ include "game2048.chart" . }}
{{ include "game2048.selectorLabels" . }}
{{- if .Chart.AppVersion }}
app.kubernetes.io/version: {{ .Chart.AppVersion | quote }}
{{- end }}
app.kubernetes.io/managed-by: {{ .Release.Service }}
{{- end }}

{{/*
Selector labels
*/}}
{{- define "game2048.selectorLabels" -}}
app.kubernetes.io/name: {{ include "game2048.name" . }}
app.kubernetes.io/instance: {{ .Release.Name }}
{{- end }}

{{/*
Create the name of the service account to use
*/}}
{{- define "game2048.serviceAccountName" -}}
{{- if .Values.serviceAccount.create }}
{{- default (include "game2048.fullname" .) .Values.serviceAccount.name }}
{{- else }}
{{- default "default" .Values.serviceAccount.name }}
{{- end }}
{{- end }}

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Values.appName }}
  namespace: {{ .Release.Namespace }}
  labels:
    {{- include "game2048.labels" . | nindent 4 }}
spec:
  {{- if not .Values.autoscaling.enabled }}
  replicas: {{ .Values.replicaCount }}
  {{- end }}
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: {{ .Values.maxSurge }}
      maxUnavailable: {{ .Values.maxUnavailable }}
  selector:
    matchLabels:
      {{- include "game2048.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      {{- with .Values.podAnnotations }}
      annotations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      labels:
        {{- include "game2048.selectorLabels" . | nindent 8 }}
    spec:
      {{- with .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      containers:
        - name: {{ .Chart.Name }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - name: http
              containerPort: {{ .Values.service.port }}
              protocol: TCP
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
      {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.affinity }}
      affinity:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
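The deployment.yaml as captured here does not reference the PVC at all, so the pods would never try to mount EFS from this template alone. Assuming the pods are meant to mount the `efs-claim` PVC, the pod spec would need a `volumes` entry and a matching `volumeMounts` entry, roughly as in the fragment below. This is a minimal sketch, not the author's actual template: the volume name `efs-storage` and the mount path `/data` are hypothetical.

```yaml
# Sketch only: fragment of templates/deployment.yaml, under spec.template.spec.
# "efs-storage" and "/data" are hypothetical names chosen for illustration.
      containers:
        - name: {{ .Chart.Name }}
          # image, imagePullPolicy, ports and resources stay as in the original
          volumeMounts:
            - name: efs-storage        # must match the volume name below
              mountPath: /data         # hypothetical path inside the container
      volumes:
        - name: efs-storage
          persistentVolumeClaim:
            claimName: {{ .Values.pvc.name }}   # "efs-claim" in values.yaml
```

A pod only reaches the volume-mount step, and therefore only waits in ContainerCreating on an EFS problem, when a block like this is present.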

hpa.yaml

{{- if .Values.autoscaling.enabled }}
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: {{ include "game2048.fullname" . }}
  labels:
    {{- include "game2048.labels" . | nindent 4 }}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: {{ include "game2048.fullname" . }}
  minReplicas: {{ .Values.autoscaling.minReplicas }}
  maxReplicas: {{ .Values.autoscaling.maxReplicas }}
  metrics:
    {{- if .Values.autoscaling.targetCPUUtilizationPercentage }}
    - type: Resource
      resource:
        name: cpu
        targetAverageUtilization: {{ .Values.autoscaling.targetCPUUtilizationPercentage }}
    {{- end }}
    {{- if .Values.autoscaling.targetMemoryUtilizationPercentage }}
    - type: Resource
      resource:
        name: memory
        targetAverageUtilization: {{ .Values.autoscaling.targetMemoryUtilizationPercentage }}
    {{- end }}
{{- end }}
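The hpa.yaml above targets `autoscaling/v2beta1`, which Kubernetes stopped serving in v1.25. Assuming the cluster runs a version where `autoscaling/v2` is available (v1.23 or later), the same autoscaler could be written roughly as follows. This is a sketch, not the author's chart. It also points `scaleTargetRef.name` at `.Values.appName`, the name that deployment.yaml gives the Deployment; the `game2048.fullname` helper used in the original resolves to that same string only when the release itself is named `game2048` or `fullnameOverride` is set to it.

```yaml
# Sketch only: hpa.yaml rewritten for the autoscaling/v2 API.
{{- if .Values.autoscaling.enabled }}
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: {{ include "game2048.fullname" . }}
  labels:
    {{- include "game2048.labels" . | nindent 4 }}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: {{ .Values.appName }}   # matches metadata.name in deployment.yaml
  minReplicas: {{ .Values.autoscaling.minReplicas }}
  maxReplicas: {{ .Values.autoscaling.maxReplicas }}
  metrics:
    {{- if .Values.autoscaling.targetCPUUtilizationPercentage }}
    - type: Resource
      resource:
        name: cpu
        target:                    # v2 nests the threshold under "target"
          type: Utilization
          averageUtilization: {{ .Values.autoscaling.targetCPUUtilizationPercentage }}
    {{- end }}
    {{- if .Values.autoscaling.targetMemoryUtilizationPercentage }}
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: {{ .Values.autoscaling.targetMemoryUtilizationPercentage }}
    {{- end }}
{{- end }}
```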

ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: {{ .Values.appName }}
  namespace: {{ .Release.Namespace }}
  labels:
    {{- include "game2048.labels" . | nindent 4 }}
  {{- with .Values.ingress.annotations }}
  annotations:
    {{- toYaml . | nindent 4 }}
  {{- end }}
spec:
  {{- with .Values.ingress.ingressRule }}
  rules:
    {{- toYaml . | nindent 4 }}
  {{- end }}

NOTES.txt

1. Get the application URL by running these commands:
{{- if .Values.ingress.enabled }}
{{- range $host := .Values.ingress.hosts }}
  {{- range .paths }}
  http{{ if $.Values.ingress.tls }}s{{ end }}://{{ $host.host }}{{ .path }}
  {{- end }}
{{- end }}
{{- else if contains "NodePort" .Values.service.type }}
  export NODE_PORT=$(kubectl get --namespace {{ .Release.Namespace }} -o jsonpath="{.spec.ports[0].nodePort}" services {{ include "game2048.fullname" . }})
  export NODE_IP=$(kubectl get nodes --namespace {{ .Release.Namespace }} -o jsonpath="{.items[0].status.addresses[0].address}")
  echo http://$NODE_IP:$NODE_PORT
{{- else if contains "LoadBalancer" .Values.service.type }}
     NOTE: It may take a few minutes for the LoadBalancer IP to be available.
           You can watch its status by running 'kubectl get --namespace {{ .Release.Namespace }} svc -w {{ include "game2048.fullname" . }}'
  export SERVICE_IP=$(kubectl get svc --namespace {{ .Release.Namespace }} {{ include "game2048.fullname" . }} --template "{{"{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}"}}")
  echo http://$SERVICE_IP:{{ .Values.service.port }}
{{- else if contains "ClusterIP" .Values.service.type }}
  export POD_NAME=$(kubectl get pods --namespace {{ .Release.Namespace }} -l "app.kubernetes.io/name={{ include "game2048.name" . }},app.kubernetes.io/instance={{ .Release.Name }}" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace {{ .Release.Namespace }} $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:80 to use your application"
  kubectl --namespace {{ .Release.Namespace }} port-forward $POD_NAME 80:$CONTAINER_PORT
{{- end }}

pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: {{ .Values.pvc.name }}
  namespace: {{ .Release.Namespace }}
spec:
  # toYaml renders the list as proper YAML, so it stays valid with more than one access mode
  accessModes:
    {{- toYaml .Values.pvc.accessModes | nindent 4 }}
  storageClassName: {{ .Values.pvc.storageClassName }}
  resources:
    requests:
      storage: {{ .Values.pvc.storage }}

service.yaml

apiVersion: v1
kind: Service
metadata:
  name: {{ .Values.appName }}
  namespace: {{ .Release.Namespace }}
  labels:
    {{- include "game2048.labels" . | nindent 4 }}
spec:
  type: {{ .Values.service.type }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: {{ .Values.service.port }}
      protocol: TCP
      name: http
  selector:
    {{- include "game2048.selectorLabels" . | nindent 4 }}

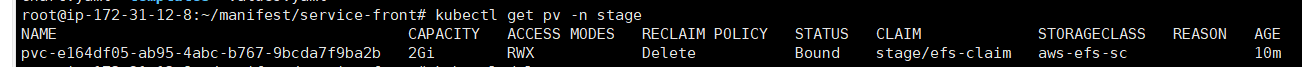

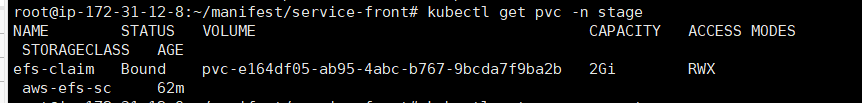

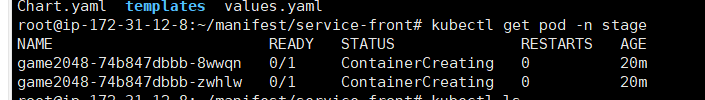

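With the EFS file system I created earlier, I did not assign any additional security group: once EFS was created, the PV, PVC, and pods all came up without problems. Later, after I changed the files and deployed again, the pods stayed stuck in the ContainerCreating state.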

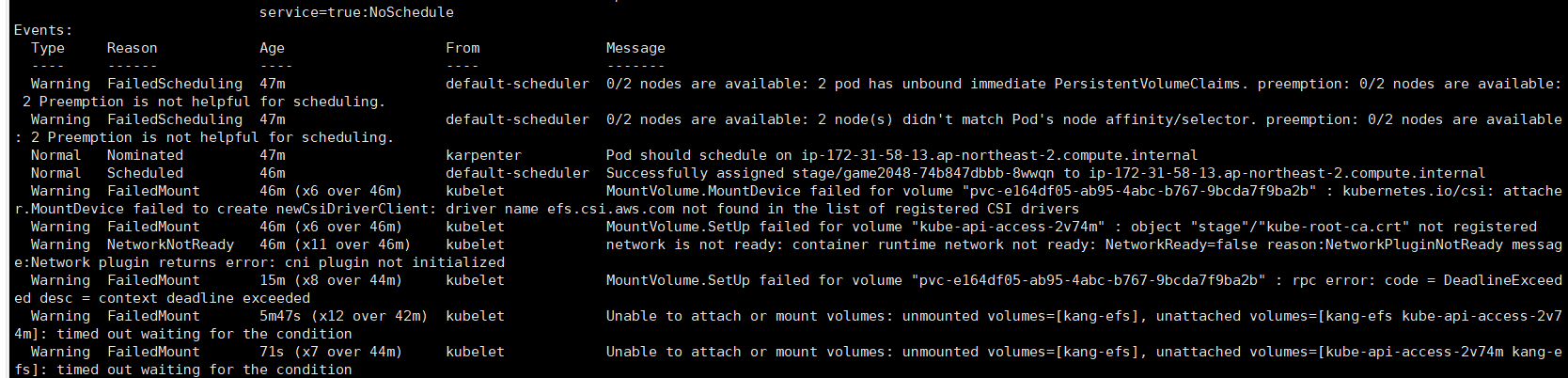

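Output of kubectl describe pod

Fix: add an inbound rule to the EFS security group that allows traffic from the eks-cluster security group. EFS is mounted over NFS (TCP port 2049), so if the security group on the EFS mount targets does not admit that traffic from the cluster, the volume mount cannot complete and the pod stays in ContainerCreating.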
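The inbound rule itself is small: it opens the NFS port (TCP 2049) on the EFS security group to the security group used by the EKS cluster. As an illustration of its shape, here is the rule as a CloudFormation fragment. The previous post used Terraform, so this is not the author's actual configuration, and the parameter names `EfsSecurityGroupId` and `EksClusterSecurityGroupId` and the resource name `EfsNfsIngressFromEks` are hypothetical.

```yaml
# Sketch only: CloudFormation fragment; all logical names are hypothetical.
Parameters:
  EfsSecurityGroupId:
    Type: AWS::EC2::SecurityGroup::Id
    Description: Security group attached to the EFS mount targets
  EksClusterSecurityGroupId:
    Type: AWS::EC2::SecurityGroup::Id
    Description: Security group used by the EKS cluster

Resources:
  EfsNfsIngressFromEks:
    Type: AWS::EC2::SecurityGroupIngress
    Properties:
      GroupId: !Ref EfsSecurityGroupId                        # rule is added to the EFS group
      IpProtocol: tcp
      FromPort: 2049                                          # NFS
      ToPort: 2049
      SourceSecurityGroupId: !Ref EksClusterSecurityGroupId   # traffic allowed from the cluster
      Description: Allow NFS from the EKS cluster to EFS
```

Referencing the cluster's security group as the source, rather than a CIDR range, keeps the rule valid when node IP addresses change.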
